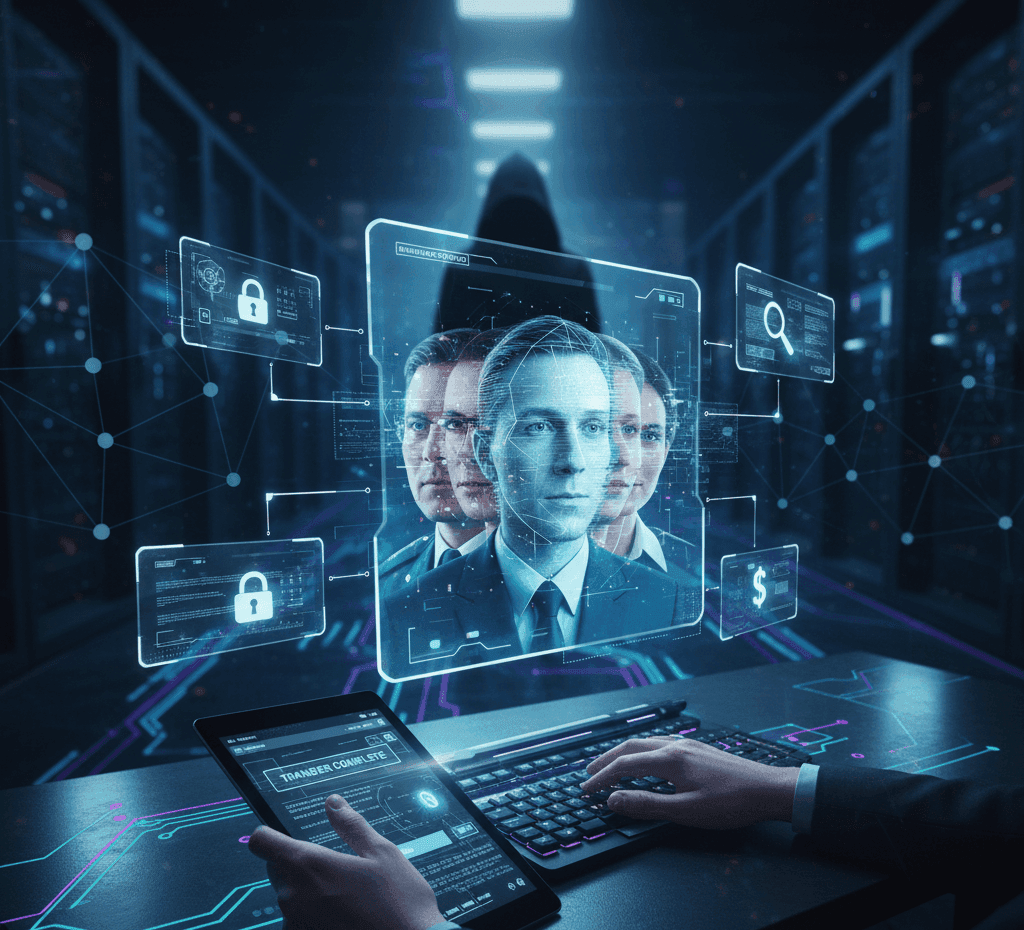

Deepfake Scams in 2025: How AI-Generated Identities Are Fueling Fraud and Espionage

In recent years, artificial intelligence has advanced rapidly, giving us powerful tools for content creation, automation, and communication. Alongside these innovations, new dangers have emerged, and deepfake scams are among the most alarming.

In 2025, deepfakes have become more convincing than ever. These AI-generated videos, voices, and images are no longer confined to entertainment or experimental tech projects. Instead, cybercriminals and even state-backed espionage groups are weaponizing them to trick individuals, businesses, and governments.

How Deepfake Scams Work

A deepfake uses machine learning models to mimic the appearance, voice, and mannerisms of real people. While early versions were often flawed and easy to detect, today’s deepfakes can look and sound nearly identical to authentic recordings.

This makes them a dangerous tool for scams such as:

- Executive impersonation: Building on business email compromise (BEC) tactics, fraudsters use cloned voices to impersonate executives and trick employees into wiring funds.

- Romance and social media scams: Fake profiles use AI-generated faces and voices to gain trust, extract money, or steal personal information.

- Political manipulation: False statements and staged videos spread misinformation at scale, influencing public opinion.

- Corporate espionage: Rivals or hackers use deepfakes to gain insider access or discredit key individuals.

Why 2025 Is a Turning Point

Several factors make 2025 different from past years:

- Accessibility of AI tools: Open-source models and affordable platforms mean nearly anyone can generate convincing fakes.

- Improved realism: New algorithms blur the line between real and synthetic content, making detection harder.

- Scale of attacks: Instead of targeting a handful of victims, scammers can now deploy thousands of deepfakes across email, video calls, and social platforms.

According to cybersecurity analysts, deepfake-related fraud is expected to cost businesses billions globally this year.

Protecting Yourself from Deepfake Scams

While technology is advancing rapidly, individuals and businesses can take proactive steps to reduce risks:

- Verify through multiple channels. Don’t rely on a single call or video for confirmation. Follow up through a second, independent method such as an in-person meeting or an encrypted message.

- Adopt AI-detection tools. Cybersecurity companies are developing algorithms that analyze inconsistencies in video and audio.

- Educate employees and users. Awareness training is key, especially for finance teams and high-level executives.

- Watch for context clues. Even a convincing deepfake can still give itself away through odd lighting, unnatural blinking, or a mismatched background.
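
For finance teams, the "verify through multiple channels" step can be written down as an explicit rule rather than left to judgment in the moment. The sketch below is one possible way to express it, not a standard or a vendor API: the `PaymentRequest` class, the `is_verified` function, and the channel names are all hypothetical and chosen only for illustration. The idea is that a confirmation counts only if it arrives on a channel other than the one the request came in on.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PaymentRequest:
    """Hypothetical record of a payment request and its confirmations."""
    requester: str
    amount: float
    origin_channel: str  # channel the request originally arrived on
    confirmations: set[str] = field(default_factory=set)

    def confirm(self, channel: str) -> None:
        """Record that the request was confirmed over the given channel."""
        self.confirmations.add(channel)


def is_verified(request: PaymentRequest, required_independent: int = 1) -> bool:
    """Return True only if enough confirmations came from channels
    other than the one the request originally arrived on."""
    independent = request.confirmations - {request.origin_channel}
    return len(independent) >= required_independent


# Example: a wire request that arrives during a video call.
request = PaymentRequest("cfo@example.com", 250_000.0, origin_channel="video_call")

request.confirm("video_call")   # same channel as the request: does not count
print(is_verified(request))     # False

request.confirm("in_person")    # independent channel
print(is_verified(request))     # True
```

Raising `required_independent` for larger amounts is one way a team might tighten the rule, since a deepfake that controls one channel is then not enough to approve a transfer.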

The challenge is ongoing: as detection methods improve, so do the tools that scammers use to bypass them.
